The Cookie Jar, December 2023

Google’s faux pas; India’s internet influence; HallelujAI?; Internet’s new buzzkill; AI ate my homework; Media’s bro-code; and more…

Facepalm Gemini

Were you mighty impressed by Google’s Gemini demo video? We all were. Turns out, the tech giant misrepresented the speed at which the AI model functions, and edited the video to make the interaction appear to happen in real time. So, while Gemini’s multimodal capabilities are undoubtedly advanced, the latency of execution is where Google attempted a clumsy sleight of hand and got called out! Tech veterans are disappointed and have slammed Google for intensifying the AI race to the point of selling blatant untruths to the public.

A sane voice: Nandan Nilekani, ‘India’s CTO’, has been amplifying exactly this for the Indian AI scene, stressing that the country should focus on efficient, low-cost use cases and local access to Artificial Intelligence solutions rather than obsessing about building bigger models. He reminds us that a small model trained on specific data can be better than a larger model when making a tool for a particular use case. Take notes, Google!

India’s powerplay

The ICC World Cup final might have tainted our winning streak, but guess where our influence is untouchable: the internet! Two of the top five most viewed pages on Wikipedia in 2023 related directly to India, with the 2023 World Cup and IPL pages finding themselves in that elite group. How did that happen? Cheap data plans, aka the ‘Jio effect’, are expected to drive active internet users to 900 million (that’s all of Europe and Russia combined) by 2025! Drawing strength from numbers seems to be the way forward for India now.

With 759 million active internet users today, India’s influence on the internet is a double-edged sword. All that attention from governments and Big Tech could be great for the GDP, but it also often leads to an inability to differentiate between people and the market.

“Mercy! Bot God!”

Anthony Levandowski, a former Googler, has started his own AI church, “Way of the Future.” Its main aim is to build a ‘spiritual connection’ with AI, and it already has a couple of thousand followers. The main activities of the church will focus on “the realisation, acceptance, and worship of a Godhead based on Artificial Intelligence (AI) developed through computer hardware and software.” This includes funding research to help create the divine AI itself. The church targets AI professionals and “laypersons who are interested in the worship of a Godhead based on AI.” Yes, we can hear ourselves talking! Looks like something the X-riskers would love to cash in on.

NYT’s bro-code

The NYT finds itself red-faced amidst a big ‘oops’ moment! The media giant released a ‘prestigious’ list of the who’s who in AI, and guess what? Yeah, you probably did: they couldn’t spot a single woman! Not Fei-Fei Li, nor Timnit Gebru, nor Joy Buolamwini, nor Safiya Noble, nor Rumman Chowdhury, not even Mira Murati, who’s kind of a household name. Brilliance knows no gender, and the internet was quick to remedy NYT’s faux pas by naming some brilliant women in AI, lauded not only for their work but also for their leadership.

If there’s one takeaway from this mess-up, it’s that AI bias is only going to get worse if we don’t uproot media bias first.

1 for 99(%)

1% decides for the rest of us. And it’s not the 1% we elect to office. Big Tech, with its computing power, data and vast market reach, makes many important decisions — and it’s about to get even more powerful! With ambitions at an all-time high, the question we need to answer is — how far will Big Tech go?

OpenAI recently announced a partnership with UAE-based firm G42, and US officials are not thrilled. They fear that this could be a conduit to syphon American technology to China, and the stakes are high as the struggle for power intensifies at the top.

There is also serious debate on what data to sell to which government, and whether or not to open-source AI. Proper regulation will certainly help, but power-hungry corporations — with their monetary and political clout — are likely to push for their agendas.

EU and AI, finally on board

After months of negotiations, it seems like the EU has finally come to a consensus on its AI Act. The first of its kind in the Western world, this Act is being lauded as a benchmark for other countries to follow. The Act will take a risk-based approach: different use cases of AI systems are classified into four risk categories, and the Act’s focus will remain mainly on high-risk and unacceptable-risk cases. But we’re not completely out of the woods just yet. The EU has generally been more sceptical about AI than most other parts of the world, and France and Germany are likely to speak out against this consensus as well, since both countries have AI startups competing with the best on the global stage. So, like all AI developments right now, the chips are all in the air!

Stirring a ‘Buzz’…

A very popular generative AI platform that enables the creation of non-consensual sexual images of real people has added a new feature that allows users to post bounties. Users are encouraged to develop passable deepfakes of real people, and whoever creates the best one gets a virtual currency called “Buzz” that users can buy with actual money.

Most of the targets have been women (big surprise) and so far most have been celebrities and influencers. The platform maintains that it should not be used to create non-consensual AI-generated sexual images of real people — but who is really stopping that? There have been cases of people with no significant online presence also finding themselves in this mess, making it creepy, unsafe and extremely concerning to all of us internet users!

Watch what you eat, GPT!

Have you ever tried eating the same thing over and over to the point that you just couldn’t swallow it anymore? Well, you’re not all that different from ChatGPT! Researchers from several top universities in the US put $200 worth of questions to ChatGPT to extract portions of the datasets it was trained on. By feeding the platform the same prompts over and over, they found that ChatGPT began generating "often nonsensical" output, some of which included memorised training data such as personal contact information, or an individual's email signature. This means that a larger budget could lead to larger amounts of data being extracted, and potentially (the ultimate dread) privacy lawsuits! The researchers also claim that this is specific to ChatGPT and not other LLMs, but it should still serve as a warning so that other LLMs, like those being developed in India now, have sufficient safeguards in place.

Generative AI ate my homework

With generative AI platforms being easy to access and use, why wouldn’t anyone flock to these to get their homework done, instead of spending hours tearing their hair out?

Sal Khan of Khan Academy said that as soon as he saw ChatGPT, he knew it would be used to cheat. But hasn’t cheating, in some form or another, always existed? Instead, he said, ChatGPT can help teaching evolve, and he has partnered with OpenAI to create an AI tool to help with learning.

So, yes, students are turning to AI for their school work and assignments, but for those who aren't, the nightmare is only beginning. Many teachers are using AI detection tools in their grading, and these tools sometimes falsely flag assignments as AI-generated, especially those of non-native English-speaking students.

India g(AI)ns

When it comes to general-purpose AI, the who’s who of the tech world has already made headway, each trying to outdo the other. The Indian innovation space is not far behind. Sarvam AI’s OpenHathi series is a push to encourage innovation in Indian language AI, especially in open models, which have little to no Indic language support. OpenHathi-Hi-v0.1, the first Hindi LLM in the OpenHathi series, was developed on a budget-friendly platform, and Sarvam AI encourages people to build fine-tuned models for different use cases on top of this release.

BharatGPT — part of the ‘Make AI in India’ revolution — is another platform that’s grown out of the country’s push to innovate in AI. Similar to OpenAI’s GPT-4, it has been trained on a number of Indian languages. Launched by CoRover.AI, it is versatile in many Indic languages, making the technology available to almost everyone in the Indian market. Looks like India is g(AI)ning some momentum!

Shot in the dark (patterns)

You may remember our bit on the Indian Government's draft guidelines on dark patterns. It doesn't stop there! After two months of consultations with multiple stakeholders, the Central Consumer Protection Authority (CCPA) notified the guidelines for the prevention and regulation of dark patterns on November 30, 2023. The list of Specified Dark Patterns now includes additions like Trick Question, SaaS Billing and Rogue Malware. But the elephant in the room stays put, as these are just guidelines and not legally binding. So only time will tell how effective these guidelines are, but we've made some good headway for sure!

CDF chips

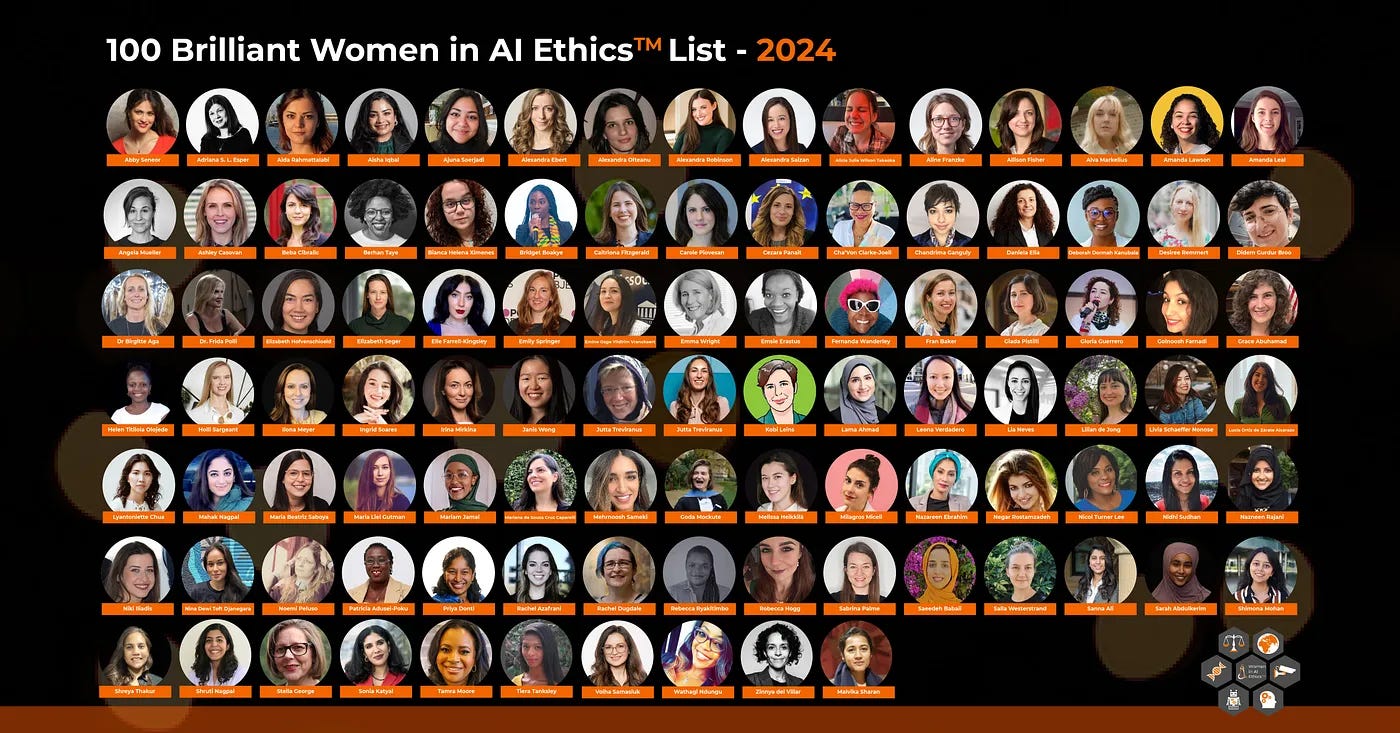

100 Brilliant Women in AI Ethics™ 2024

Ending the year on a high note, CDF co-founder Nidhi Sudhan was placed among the 100 Brilliant Women in AI Ethics™ 2024, announced at the Women in AI Ethics™ (WAIE) summit in New York on December 1, 2023. Curated by the WAIE team since 2018, this list recognises and spotlights diverse and emerging voices and game changers in AI/tech.

Tr-Uli making a difference

During the Sixteen Days of Activism in 2023, Tattle opened up the expansive slur list for its groundbreaking content-filtering tool Uli. CDF’s Content Executive Akash S S participated in this initiative, contributing the first-ever Malayalam slurs to the list. In three collaborative sessions, Akash worked directly with Uli's developers, adding an impressive 90 slurs to the list, along with metadata providing essential context to each term. This collaborative effort reflects our shared commitment to creating a digital space that is respectful and safe for all digital citizens.

Good Tech Squad, assemble!

Last month, we spoke about our plans to create a peer-to-peer support group at Trivandrum International School (TRINS). The Good Tech Squad is officially in action as of December 2023! With the guidance of Citizen Digital Foundation and TRINS, the Squad will undertake various activities to enable safe and healthy online experiences for peers.

Teachers’ refresher in Digital Humanities

CDF was invited to deliver a session on Tech extractivism as a part of the UGC HRDC’s annual Multidisciplinary Refresher Programme. We walked a cohort of 41 professors from across the state through the nuances of extractive digital business models, misinformation, and AI Bias through an interactive session. The professors brought up key points of discussion and explored applications in curricula and student exchanges.

DPDPA rules and online child safety

In response to the ambiguities of the recently enacted DPDP Act, the Wadhwani Institute of Technology and Policy (WITP) organised a consultative workshop to discuss the framing of follow-up rules. CDF co-founder Nidhi Sudhan joined industry experts Chitra Iyer (Space2Grow) and Nikhil Naren (O.P. Jindal Global University) in a focused discussion on 'Navigating Challenges in Processing Data of Minors.'

CDF’s 2023!

It's been quite the year at CDF, and we're excited to share some of our most meaningful milestones with you! Keep an eye out for CDF’s 2023 Snapshot newsletter where we'll be highlighting the work we've done this year to make tech safer for everyone.

CDF is a non-profit, tackling techno-social issues like misinformation, online harassment, online child sexual abuse, polarisation, data & privacy breaches, cyber fraud, hypertargeting, behaviour manipulation, AI bias, election engineering, etc. We do this through knowledge solutions, responsible tech advocacy, and good tech collaborations under our goals of Awareness, Accountability and Action.