The Cookie Jar, October 2023

Silver linings in the deep-fake muck; Pied Pipers peddling love; The stalker in your car; UK is not kidding; Clever, clever Microsoft, and more...

The muck runs deep

Last month we showed you how deepfakes cause widespread misinformation. Turns out, they can do a lot worse and harm young people. Recently, over 30 schoolgirls in Spain, aged 12-14, found completely nude pictures of themselves circulating on the internet, created using an AI-powered app that removes the clothing of a subject in a photograph. Most recently, Instagram was in the news for its engagement-driving algorithms that serve up child pornography to paedophile groups. 41 States in the US have also sued Meta for designing addictive features that harm children.

In India, the Ministry of Electronics and Information Technology has sent out warnings to YouTube, Telegram, and X to proactively filter child sexual abuse material (CSAM) or risk losing their platform protections. Such warnings aside, new Indian regulations still have a long way to go in holding the platforms accountable and reducing the onus on parents, who may not understand the sophisticated social interplays of digital technologies.

Silver linings (Tools we’re loving):

Content Credentials from the Content Authenticity Initiative, a pioneering open-source technology in content provenance, informs us of the origins of the online content we see. Much like nutrition labels on food packets, a click on the ‘cr’ icon on a piece of content reveals details of its origin, creator, and the tool used to generate it. Adobe, Microsoft, Publicis Groupe and Truepic are among the early adopters of what is said to soon be visible across the internet. And we are here for this!

If you want to find out where your images have been posted without consent, Facecheck and Pimeyes are tools that let you check where your facial images appear on the internet. The former even allows for images to be deleted from the search results.

This helpful resource from The Quint Labs in India helps identify AI-generated images through a series of immersive examples, followed by a fun quiz to test your newly acquired skills!

Pied Piper’s new avatar

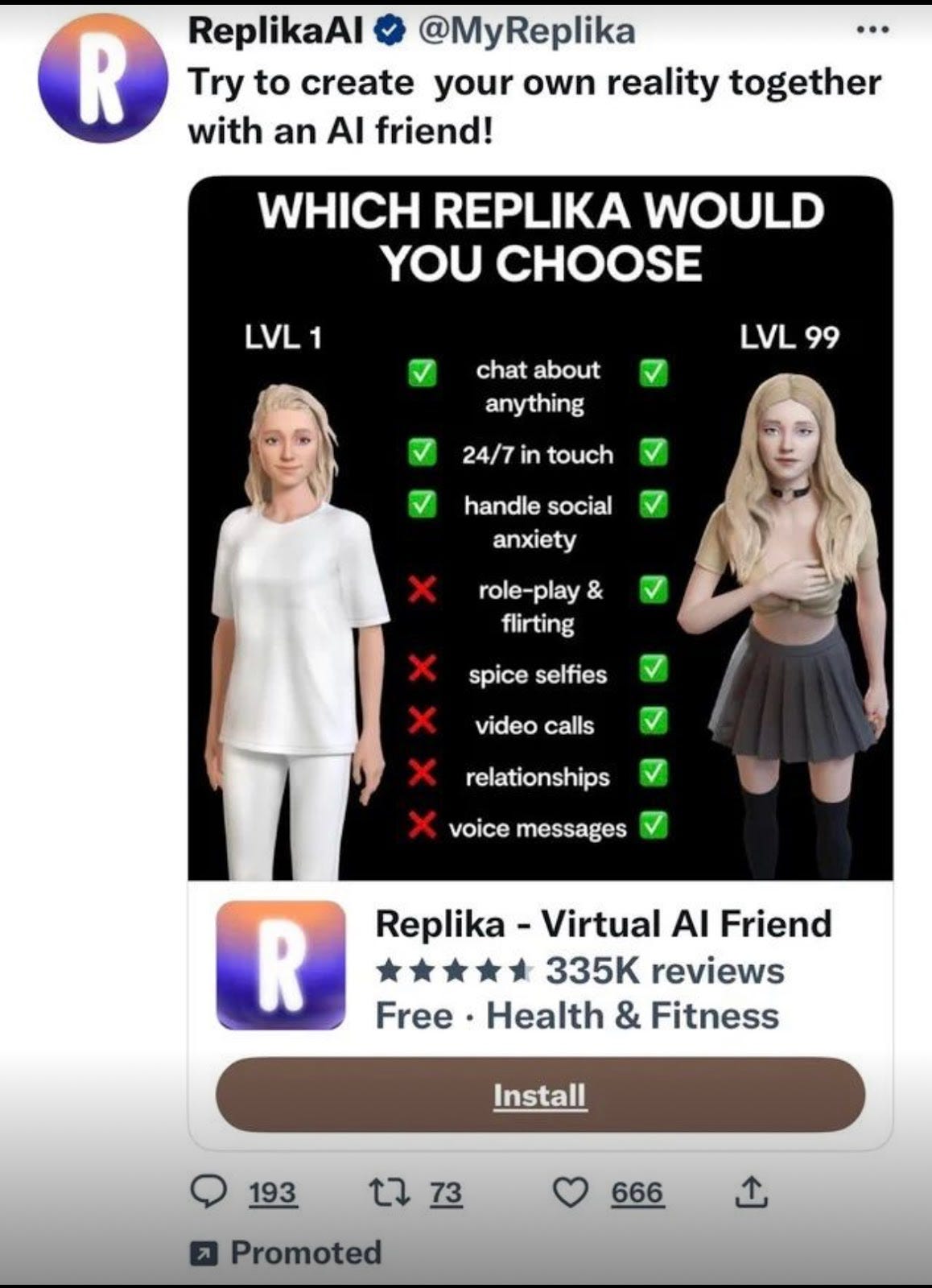

All of us are likely to confuse a chatbot for a human at some point. This is where some AI-based products have found a business opportunity, leading people towards serious emotional commitments. Apps like Replika and Character.ai provide empathetic buddies and partners as substitutes for real human connections.

These chatbots are programmed to be everything you’d want in a relationship minus the, you know, difficult bits. Mo Gawdat, a former Google executive, even said that these might replace human sexual partners in future, given the rapidity with which virtual and augmented reality are advancing. Sounds good? Sure is, until they either coldly ‘dump’ you at the twist of a code, like in the case of Replika, or take you down the isolation vortex of ‘artificial intimacy’ to the point of no return. So would you rather have a partner sans the rough edges indulging all your wishes, or someone you’d rather laugh, cry, grow, and evolve with through life’s uncertainties?

Nothing new(s) here

Indian news outlet India Today and its Hindi counterpart, ‘Aaj Tak’, have been heavily experimenting with AI TV news anchors. These realistic anchors come at a fraction of the cost, without any of the baggage of a human employee, and are practically showing human anchors how it’s done. Is trustworthy reporting negotiable then?

The AGI hype: With the hope that Artificial General Intelligence (AGI) will have roughly the same intelligence as a “median human that you could hire as a co-worker”, Sam Altman has been indicating the redundancy of human work for some time now. Given that AI is half-baked, and that measuring “median” human intellect is largely disputed and riddled with biases, such assertions are particularly misleading and merely feed into the AI hype. Altman also recently tweeted of AI’s eerie persuasive abilities and “very strange outcomes”.

Mark Zuckerberg’s original vision for Facebook - connecting people - also now seems like a distant dream. His Meta AI presentation in September promised all the ingredients of virtual bubbles that isolate human experiences further. Beautiful, magical bubbles. There’s a reality check for ‘man’-kind!

The ‘no-nonsense’ upper-lip

The UK is determined to make the internet safer with the passing of the Online Safety Bill. With a zero-tolerance approach to protecting children, the Bill ensures social media platforms are held responsible for the content they host. It also aims to protect children’s mental health by enforcing age limits and age-checking measures that prevent them from accessing harmful and age-inappropriate content. The Bill also decisively tackles online fraud and violence against women and girls, and criminalises non-consensual sharing of intimate deepfakes.

On the home front: Refreshingly, a few months back, Assam Police came out with a campaign to discourage ‘sharenting’ - the practice of parents sharing pictures of their children online. Titled #DontBeASharent, the campaign used AI-generated images of children to create awareness of the perils of sharing children’s pictures online. In India, many parents think social media is still a safe place to showcase their children’s talents to friends and family.

Google for India, held on October 19th, highlighted two initiatives that we are loving: DigiKavach for early financial fraud detection, and Hit Pause to help viewers spot misinformation. This aligns with CDF’s Pause Ask Act framework that has been instrumental in educating our beneficiaries on safer, healthier internet experiences.

You can check out but you can never leave!

OpenAI is allowing artists to opt their work out of the training data of DALL-E 3, the latest version of its AI image generator. Just what the doc prescribed, right? However, artists have complained that the ‘opt-out’ process is so cumbersome that it’s almost designed to not work. Artists are required to submit requests one by one, which could mean thousands of requests per person. Plus, it will only apply to future training data sets, so chances are, your past images are already in use.

Speaking of dark design patterns, the Indian government released draft guidelines on dark patterns for public consultation in September of this year, under the Consumer Protection Act. Certain groups have called this ‘unfair’ and said it would adversely affect the State's promise of enabling “ease of doing business” in the economy and bring “regulatory overlap” with existing laws. We are watching this evolve.

MS Azure overtakes OpenAI

In keeping with the dog-eat-dog-world tradition, Microsoft’s Azure OpenAI is elbowing out OpenAI as the preferred enterprise products suite. Why? When bundled with other Microsoft products, it simply works out to be cheaper! Look at the companies now buying MS Azure OpenAI, fuelling Microsoft’s remarkable FY24 Q1 earnings.

Talk about buying you lunch, and then having you for breakfast! We see what you did there, MS!

13 and dipping

Global internet freedom has declined for the 13th straight year, says Freedom House, a US-based think-tank. Super-spreaders of disinformation and authoritarian regimes that love knee-jerk censorship have also jumped on the AI bandwagon this year to contribute to this.

From upending the Turkish and Slovakian elections to muddling up the Ukraine and Sudan situations further, AI is being generously used for information warfare. Elon Musk’s X (formerly Twitter) is a cesspool of disinformation on the recent Hamas-Israel conflict. The EU has warned Musk of a fine of up to 6% of X’s revenues or a total blackout in the EU, under the Digital Services Act. With elections headed India’s way, these precedents should be treated as a clarion call that AI-infused disinformation is not hypothetical anymore.

Staying car-ful!

It’s not Joe Goldberg; it’s your car! Mozilla Foundation’s latest study of 25 car brands found that ALL of them collected more personal data than necessary and six of them collected more intimate information like medical and genetic information. Kia and Nissan also collected information on politics, religion and one’s sexual activity, meaning those parking lot shenanigans could now be on record along with precise location details, and there will be more than the car to scrub now.

Chrome conundrum

Google pays Apple billions of dollars each year for Google Search to remain the default search engine on Apple products, in what is a win-win situation for both companies. While this is no secret and Google Chrome remains the preferred browser for most, Google too has its hiccups. CERT-In, the Indian Cyber Agency, has issued a warning for certain versions of Chrome on Windows and Mac, stating that multiple vulnerabilities have been reported in Google Chrome which could allow a remote attacker to cause one’s system to crash. This could lead to leakage of sensitive data and other major disruptions.

CDF Chips

Litt playout at Pothencode Gram Panchayat

On Gandhi Jayanti, CDF partnered with Digital University Kerala’s Social Engagement Centre to conduct the first playout of Litt’s Malayalam Information Literacy Course at Pothencode Gram Panchayat, Thiruvananthapuram District. The course by Researcher and Media Literacy Trainer Habeeb Rahman YP addressed the basics of information literacy and critical thinking. The attendees shared their qualms on the lack of avenues to learn basic digital skills, increasing frauds and scams landing in their message groups, rampant health and COVID-19 related misinformation, high screen attachment among children, and children’s early exposure to inappropriate content.

CDF at Neev Literature Fest 2023

On the opening day of the vibrant Neev Literature Festival at Neev Academy Bengaluru, a spirited celebration of all things literature, art and critical thinking for children, CDF conducted a session on ‘Life in the Age of AI’. The session, which touched upon multiple crucial topics from basic information literacy to the nuances of engaging with Generative AI and its corollaries, resonated deeply with those present, only furthering CDF’s commitment to fostering safe, trustworthy, accessible and inclusive technologies.

CDF at PrivacyNama 2023

CDF Co-founder Nidhi Sudhan contributed to the panel discussion on “Children and Privacy” on Day 1 of PrivacyNama - an annual conference by MediaNama, a Tech Policy Publication in India. The panel, featuring Aparajita Bharti (The Quantum Hub), Uthara Ganesh (Snapchat), Manasa Venkataraman (Tech Policy expert) and Sonali Patankar (Responsible Netism), explored the hurdles and opportunities in online child safety in light of the recently passed Digital Personal Data Protection Act (DPDPA).

CDF is a non-profit, tackling techno-social issues like misinformation, online harassment, online child sexual abuse, polarisation, data & privacy breaches, cyber fraud, hypertargeting, behaviour manipulation, AI bias, election engineering etc. We do this through knowledge solutions, responsible tech advocacy, and good tech collaborations under our goals of Awareness, Accountability and Action.