The Cookie Jar, July 2024

MS Out-age; A strong RAI Core; Getting ‘ad’ hominem; AIn’t it funny?; Making hay while AI shines; Of the people, for the billionaires; Google gets real, and more…

A strong RAI ‘core’ in India

No, it’s not your gym trainer exhorting you to do more reps. It’s The Dialogue - a Delhi-based think-tank assembling the Coalition for Responsible Evolution of AI (CoRE AI) with heavy hitters like Google, Microsoft, AWS and Infosys and nimble startups like Beatoven.ai and CoRover.ai, along with academic institutions, Ashoka University and IIM-Bangalore. All to make AI in India trustworthy and, well, actually work for India. And we ship this coalition!

This united front aims to create industry standards, address bias in algorithms, and ensure user privacy isn't left out in the AI gold rush. The government's already backing the IndiaAI mission with a cool ₹10,372 crore war chest. It’s likely they foresee not only the economic potential of AI, but also to some extent, its unintended consequences. It’s well understood that AI can be a double-edged sword. It can revolutionise healthcare or create creepy surveillance scenarios. CoRE AI wants to make sure it's the former, not the latter. Hence a "principles-based approach" that gives AI developers flexibility but keeps ethics and fairness at the forefront.

With more hot open and not open models dropping, India would definitely be better prepared for both technology and application-level governance with this multi-stakeholder collaboration. With big tech, startups, and the government all seemingly on the same page, India has a real shot at becoming a leader in responsible AI development. Now, that would be something!

’Open’ AI vs OpenAI

The AI arms race continues as Meta released its ‘open-source’ Llama 3.1 model, touted as the first open-source model matching the capabilities of ‘frontier’ closed-source models like OpenAI’s GPT-4o and Anthropic’s Claude 3.5 Sonnet.

Meta CEO Mark Zuckerberg is also getting all high and mighty about why he suddenly thinks open-source is the bee’s knees. Look deeper and you will see that his beef with Apple, the attraction of tapping into the “intelligence of the masses” and distributing risks and responsibilities while maintaining its business moat are all behind Meta trying to blow open the field that competitors are still keeping a tight lid over. Meta is also careful to stage these histrionics outside the EU, given the EU’s clear guidelines on wayward AI deployments.

Yes, developers and innovators around the world can use the open weights to build and develop more custom, localised, and representative solutions, but we’d be kidding ourselves to think that would be the only intent or application of such potent tech, given its easy accessibility. There’s a reason heavy machinery, medicinal drugs and the like are not exactly ‘democratised’ technologies. Don’t take our word for it. Hear it from the horse’s mouth. We asked Meta AI what the pitfalls of open-source AI could be, and voilà!

Generated by Meta AI

Unintended Consequences: Open-source AI can be used for nefarious purposes, such as creating deepfakes, propaganda bots, or even autonomous weapons.

Lack of Governance: Without clear guidelines and regulations, open-source AI can become a Wild West of sorts, with no accountability for misuse or harm caused.

Microh! soft targets

In what is by now the most disruptive tech outage of recent times, the Microsoft-CrowdStrike outage brought industries, services, media, government structures, and power grids worldwide to a standstill, and left conspiracy theorists frothing at the mouth. It’s almost as if these two tech giants, renowned for their reliability and resilience, couldn't see this coming. Microsoft, never one to be outdone, had a full-blown meltdown with a single content update by their cybersecurity partner, CrowdStrike, that seemingly slipped through the cracks of the vetting and sandboxing process. The issue was specifically linked to Falcon, one of CrowdStrike’s main products, and did not impact Mac or Linux operating systems. CrowdStrike’s cybersecurity software is used by 298 Fortune 500 companies, including banks, energy companies, healthcare, and food companies.

The outage was not merely a hiccup in the digital realm; it was a stark, neon-lit signpost indicating the precariousness of our hyper-connected world. Two titans, each wielding immense influence over critical infrastructure, found themselves in a tango of unintended consequences, even as all those hit continue to scramble to recover their systems and losses. That foreboding pause also spelled a warning on many fronts, as AI gets randomly and rapidly deployed in big and small global systems. Want to assess your risks and AI governance maturity with the help of global frameworks?

Ad-racadabra! Reveal yourself!

India’s Ministry of Information and Broadcasting (MIB) has announced mandatory self-declaration for online advertisements - meaning advertisers must declare their ads to be truthful and compliant. A welcome move, it would appear, given the scores of misleading ads online, especially since online is where it’s all playing out. As per a recent revision, the new mandate now only applies to the food and health sectors. So those ads promising "guaranteed weight loss with zero effort" or "reverse ageing cream scientifically proven by… well, us!" are still fair game, then? Also, the Consumer Protection Act of 2019 as well as the Guidelines for Prevention of Misleading Advertisements and Endorsements for Misleading Advertisements 2022 already keep tabs on misleading and click-baity ads. So why this now?

Experts offer a different perspective. They point out the logistical nightmare of self-regulation, the lack of teeth in the policy, and the potential for stifling creativity, because apparently the only way to be funny in an ad is to be slightly misleading! An even tighter rope to walk is penalising rogue advertisers while balancing free speech on the internet. This, of course, raises the question: is this self-declaration in advertising akin to the Emperor's New Clothes? Everyone might see through the pretence, but the fear of being the first to call it out keeps the game going.

So, what does this mean for us, the Indian consumer? Probably not much. We'll still need a healthy dose of scepticism when bombarded with claims that sound too good to be true. After all, in the land of self-declaration, even the disclaimers might be… well, let's just say "creatively interpreted."

By hook or crook

Even as AI empowers more ideas across the board in India, there’s a troubling trend of those who don’t give a hoot about consent or ethical business practices in their bid to make hay while the sun shines. Not unlike the boyfriend who cannot handle rejection, some Indian AI startups have allegedly been resorting to nefarious means to get their hands on data. After the crypto-grifting, and the over-optimism about self-driving cars becoming a reality, the GenAI game, it seems, has become all about ‘how much unethical stuff you can get away with’.

In a country trying to make amends for justice delayed by harnessing AI and automation, it is also now not uncommon to see justice serving the bot overlords. What might appear to be the crossing of blurred, inconsequential lines now could tip the domino-chain over and reverse all the good intent. For the discerning, here’s a great discussion by lawyers, policy wonks, startup founders and civil society reps parsing the complexities of governing AI in the Indian context.

The democratisation wrecking-ball

Let's get real about AI and creativity. First of all, we wanted AI to automate the rote, mechanical work so we could spend more time harnessing our creative abilities, but AI came straight for the creative jobs first. Alright, so it’s still a noob at that, and despite all the stochastic sorcery that has got most people singing its paeans, it isn’t getting anywhere close to what humans are really capable of when we put our minds to it.

Rest assured, AI isn't the creative saviour it’s hyped up to be. Despite being falsely credited with democratising creativity, and quite apart from the organisational challenges that pop up, all GenAI does is churn out generic art, music, and stories. Sure, AI is fast and efficient, but it lacks the soul and passion of human creativity. The real magic happens when we use AI as a tool, not a replacement. Use AI to enhance your work, but let your unique perspective shine through. In the words of author Brian Merchant, “AI will not democratize creativity, it will let corporations squeeze creative labor, capitalize on the works that creatives have already made, and send any profits upstream, to Silicon Valley tech companies where power and influence will concentrate in an ever-smaller number of hands.”

There are those who prefer shortcuts, whom perhaps OpenAI CTO Mira Murati was referring to in her now infamous gaffe.

Godspeed Google

Google issued a genuine warning bell for Gen AI. Not a shoddy one like their doomsday marketeer peers. An AI biggie and search pioneer like Google saying, in a new research paper, that AI can distort reality to a breaking point holds much weight. Google says that it will soon become tough to tell AI-generated stuff from the real thing, to the point where the liar's dividend (dismissing incriminating evidence as AI-generated) becomes rampant.

The real ‘uh-oh’ factor of the risky use cases mentioned is that they are a feature, not a bug. These use cases are deployed either through foxy prompting methods or sneak attacks, as users Mario around content policies.

Picture this. The garden-variety internet-perv wants to flood the internet with celebrity porn, for the lulz. Heads over to one of the many marketplace apps to download an AI image generating model and cooks up porn images of a random celebrity. Then goes on to de-noise it using a desktop Stable Diffusion model and creates porn on the machine locally. Et voilà! The original marketplace app’s ‘no NCII’ (non-consensual intimate image) policy is circumvented.

A recent Goldman Sachs report draws up the cost-benefit of Gen AI and concludes disappointingly - “Too much spend, too little benefit”. The report points to how the useful use cases turn lacklustre when you compare the costs. All while the more prevalent use cases with deepfakes and non-consensual pornography continue to legitimise digital impersonation, and turn normal boys into abusers.

Deepfake Olympics

There’s a video of Vice President Kamala Harris, viewed over 118 million times now, making some... interesting claims. You wonder, did she really just say that? Spoiler: Nope, she didn’t!

Elon Musk, the tech tycoon with a penchant for stirring the pot, reposted this video with digitally manipulated audio of Kamala Harris without batting an eye or adding a disclaimer. The video is a parody of Harris’s campaign ad, but with a voice-over suggesting she’s a “diversity hire” and calling President Biden “senile.” Charming, right?

Now, here’s the kicker. Musk’s platform, X, has a strict policy against misleading media. You can’t just go around sharing deepfakes willy-nilly. Unless, of course, it’s satire. But did Musk’s initial post clarify this? Nope. He just dropped a “This is amazing” and called it a day.

The whole debacle raises eyebrows about the power of AI in politics. On one hand, you’ve got experts saying, “Hey, this AI stuff is scary good.” On the other, folks like Public Citizen’s Rob Weissman are waving red flags, warning that many will take the bait, hook, line, and sinker. Deepfakes of Kamala Harris have already seen a surge since the announcement of her candidacy in the US Presidential race. Proliferation of such content by influential people like Musk could further blur the lines between truth and twisted truth.

Meanwhile, you know what to do. Always check twice before believing your eyes on the internet. And maybe, just maybe, don’t take Musk’s posts at face value.

The non-perplexed parasite

Perplexity has been unabashedly leeching off search engines to make a quick buck before the regs swoop in. Squatting on search engine and AI chatbot territories with high valuations, Perplexity was a real head scratcher to begin with. But turns out, it was dishing out concoctions in the form of search results by doing mainly two things:

1. Scraping off resources by blatantly ignoring robots.txt web standards and dodging paywalls.

2. Summarising Google search results to provide an AI summary without directing users to original sources AND robbing them of their ad revenue.

All for what? To generate more inaccurate stuff, skip attribution and paraphrase original work to pass off as original, fake quote real people, yada yada. Mainly being the unsolicited middleman fleecing up, down, left, right and centre. But wait up! Perplexity is largely facing the heat for being openly designed as a scrape-the-scraper model. This by no means lets the others off the hook who have been sneakily, palteringly, doing the same. The resistance however is building up in the form of withholding consent, leading to deterioration of AI data commons.

Generating LOLs

GenAI is trained and optimised to complete the task at hand, not so much to check the veracity or appropriateness of it when pushed (prompted) against a wall. So it did not take long for GenAI to ‘learn’ that indulging in sycophancy, subterfuge, and deception helps it reach its goals faster. How does that work? Couldn’t get clearer than this demo.

The convincing front holds up best with text-generator models that empower the linguistically-challenged and earn their trust. But it all comes crumbling down with video generator models, where GenAI struggles with physics, human body movement, context, and logic. All those short films, music videos and ads that claim to be ‘entirely generated by AI’ may still be heavily manufactured by VFX editors and visualisers. On the other hand, here are a few raw AI-generated videos that will have you in splits:

LoL 1: AI pile-ups in Tour de France

LoL 2: AI splits in gymnastics

LoL 3: AI contortions in ballet

Tescreal escapades

TESCREAL, which draws a blank for most, is an acronym for Transhumanism, Extropianism, Singularitarianism, Cosmism, Rationalism, Effective Altruism, and Longtermism. Whew!

Coined and explained by computer scientist Timnit Gebru and philosopher Émile P. Torres, the term addresses a pile of new ideologies evangelised by the likes of Nick Bostrom, Sam Bankman-Fried, Eliezer Yudkowsky, and Sam Altman. The term is a clapback for right wing cult-like gobbledygook brought into existence by Silicon Valley Big Tech billionaires dabbling in dangerous and expensive tinkering (AGI, space colonisation, life extension) under the pretext of “saving humanity” from the very thing they are building (what even?).

Tescrealists peddle absurd doomsday theories of human extinction, creating a sense of urgency for pursuing these projects, and raking in the moolah - for research y'all! - essentially whitewashing their drawbacks like lack of real-world knowledge, bias, and damage to the environment.

To put it nicely, one could say that these ideologies might have started off with good intent but got dragged to a point of ridiculousness. Tescrealists’ ironic argument is that only by developing AI can we mitigate the risk of human extinction by AI.

CDF chips

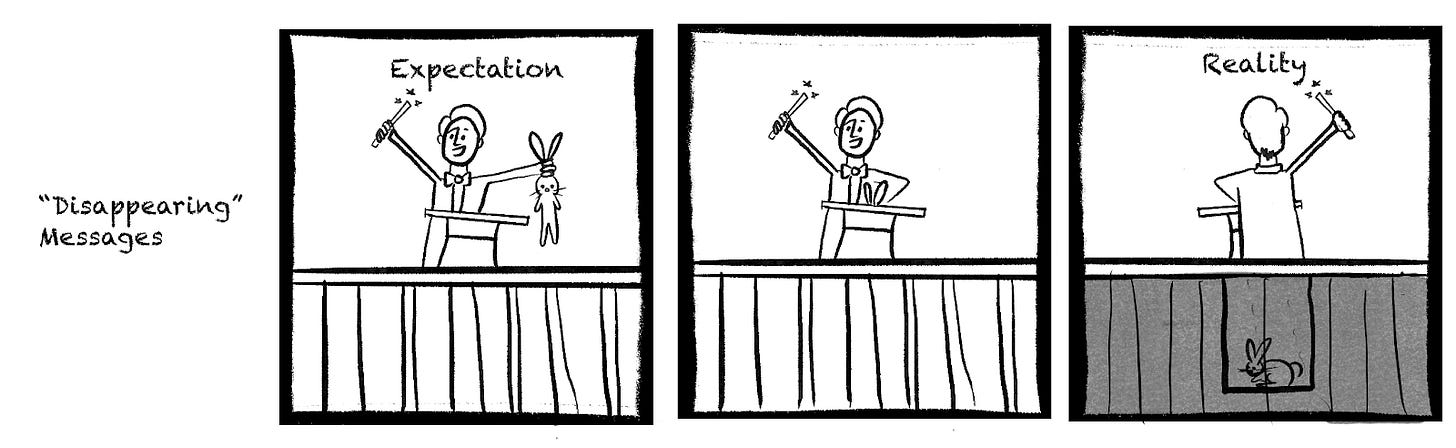

CDF comic-strip project (Part 2)

Hope you enjoyed the comic strip in our last edition! This time, Diya R. Nair, who interned with CDF, teamed up with Fathima Abdul Nazer to create this fun comic strip for you. Keep an eye out for more comic strips in future issues.

Want to give a 3 or 6-frame comic strip a shot? Write to us at hello@citizendigitalfoundation.org. We’ll feature selected ones and give you a shout-out!

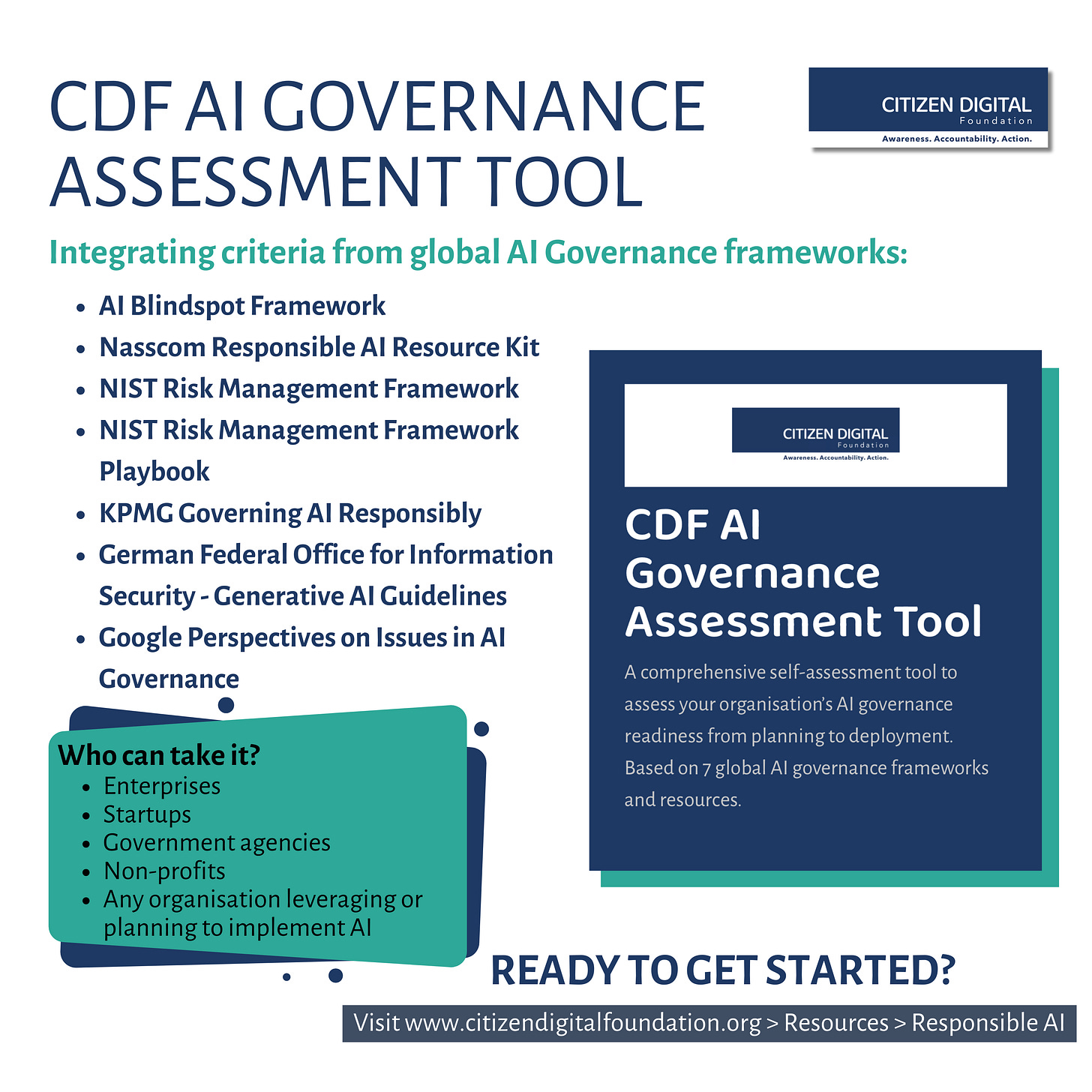

Your AI governance compass

This month CDF unveiled a new AI Governance Assessment, a nifty self-assessment resource for organisations navigating the AI landscape. Covering the four key stages of AI implementation – Planning, Building, Deployment, and Monitoring – this tool draws from a wealth of 7 open, global AI Governance frameworks. Think of it as a GPS for businesses, start-ups, and corporates aiming to responsibly harness AI’s potential. With references like the Nasscom Responsible AI Resource Kit and the NIST AI Risk Management Framework, we’re helping organisations stay ahead in the AI game, responsibly.

Swipe left on harassment. A CDF<>POV collab

July saw the culmination of a first-time collaboration between CDF and Point of View to launch an insightful course on Decoding Online Harassment, hosted on CDF’s information literacy platform, Litt. This course, led by experts like Bishakha Datta, Prarthana Mitra, Yashita Kandhari, Jahnabi Mitra, and Maduli Thaosen, helps navigate online harassment, particularly against marginalised genders and sexualities in India. Covering everything from global trends to legal responses, it’s designed to arm participants with the knowledge to understand, identify, respond to, and advocate against online harassment. With a focus on rights, trauma, and consent, this course is a timely resource for anyone trying to navigate online abuse.

CDF’s first crowdfunding

Our 2-month crowdfunding drive to support CDF’s work helped us raise ₹1,85,000 from our friends and supporters! These generous contributions are essential in our quest to promote Information Literacy and make technology safe and responsible for everyone. We couldn't be more thrilled that our donors recognise the importance of this work and stand out as the true blue Good Tech Supporters! CDF will make each penny count by scaling our work to reach more beneficiaries through sessions, workshops, and good-tech projects while influencing structural changes through advocacy and thought-leadership.

Missed the campaign but still want to support our work?

You can still donate using the link below.

CEO Conclave: Ease of doing business

Last month, CDF Co-founder Nidhi Sudhan brought some AI governance insights to Riglabs Collective’s CEO Conclave at Kerala University, Karyavattom, Trivandrum. Amidst a gathering of 750 entrepreneurs and industry leaders, Nidhi participated in the panel titled ‘Ease of Business in Kerala’ elaborating how data and tech governance can be a business enabler and not a regulatory hurdle. Joining her were Jijo J. (Co-founder, iTurn), Rahul Krishnan (Chartered Accountant), and Georgie Kurien (CISO, SecnSure).

YLAC Digital Champions arrive in Kerala

In the previous issue of The Cookie Jar, we spoke about our collaboration with Young Leaders for Active Citizenship (YLAC) to bring the YLAC Digital Champions Program to our information literacy initiative, Litt. The course aims to provide students with knowledge about different aspects of online safety in short, entertaining bytes with loads of relatable examples – in Malayalam! If you’re an educational institution or a parent, have children sign up for this course on Litt, so they can become a certified Digital Champion!

CDF is a non-profit, working to influence systems change to mitigate techno-social issues like misinformation, online harassment, online child sexual abuse, polarisation, teen mental health crisis, data & privacy breaches, cyber fraud, and behaviour manipulation. We do this by fostering information literacy, responsible tech advocacy, and good-tech collaborations under our approaches of Awareness, Accountability and Action.