The Cookie Jar, May 2024

The 4(o)h sighs, politico AI avatars, responsible porn > responsible AI, AI overkill at Google, Apple crushed, red flag AI vocab, and more…

Of, by, and (4o)h man!

If you were swayed, slapped, or swaddled by OpenAI’s 4o demos, you know your life isn’t the same. OpenAI has upped its GPT-game, albeit not without the habitual foul play, and yet people are here for it. Feed GPT-4omni documents, images, URLs, presentations… and make it draft summaries, emails, quizzes, or what you will. Free users get up to 16 prompts a day. Make hay while the sun shines, yeah?

Ironically, inspired by the virtual assistant in ‘Her’, OpenAI’s multimodal AI assistant combining intelligence, translation, and text-to-speech now sees, hears, translates, and tutors. All its snazzy capabilities however were overshadowed by the now off-the-shelf voice feature that interacted in a strangely excited, flirtatious voice of ‘Sky’ or ‘Samantha’ or Scarlett Johansson if you will, trying to match emotional tone with that of the user, but also losing the plot there. Why oh why blur the lines of machines masquerading as humans, especially when the potential to subliminally seed ideas, coerce, and manipulate people’s minds using such tools has been proven over and over again! Bags of salt also accompany 4o’s emotion recognition abilities. And how much deeper is the ‘blonde’ trope of a subservient, compliant woman coyly embarrassed by her own follies – among other male fantasies – going to be coded into supposedly ‘intelligent’ new-age systems meant for everyone alike!

On 4o’s safety front, OpenAI claims to have (a) dropped the number of tokens per query to save energy, (b) applied safety-by-design protocols, (c) red-teamed the new modalities, and (d) created new guardrails for audio. However, 7 out of OpenAI’s 10 safety and ethics executives quitting in the launch week because ‘shiny > safety’, and a mighty miffed ScarJo unleashing the suits upon OpenAI for training Sky (4o’s voice) on her voice likeness despite her explicit refusal, are causing both 4o and Altman’s credibility to lose their voices.

Your serve, Google!

Google’s ‘Astra’nomical retort: Google’s I/O24 was a day after the 4o drop. Google announced Project Astra as their version of a multimodal assistant, among a horde of AI updates integrated into Google mail, video, maps, and photos making it more application-ready than 4o. Owning your screens, applications, and actions like never before.

Your serve, Microsoft!

I ‘Recall’ what you did last summer: MS, powered by ChatGPT 4o, goes a step further to help ‘Recall’ all integrated applications on your system. Simply put, you can search for ANYTHING you have done on your computer. Pull up an old email, compare documents, search for forgotten passwords, as well as Personally Identifiable Information (PII), all through the search/timeline bar. Yip, Recall AI will have full visibility of your screens by default. Plus, Copilot is getting a seat at your table, as a ‘helpful’ assistant.

Make no mistake, Gemini, Astra, Copilot and 4o are transformative and useful technologies, but hands-off-the-wheel innovation, however brilliant, could open up new cans of worms. 0-100 in 5 seconds is mind-blowing, but can only take you so far without brakes, seatbelts, and airbags. Interestingly, Google could pre-empt the AI-count in their presentation this year, but not the $271M fine in France over copyright issues. And OpenAI is now left to fend off ScarJo’s wrath by having to mute 4o.

Digging itself deeper in ‘search’ of Goooo(g)ld

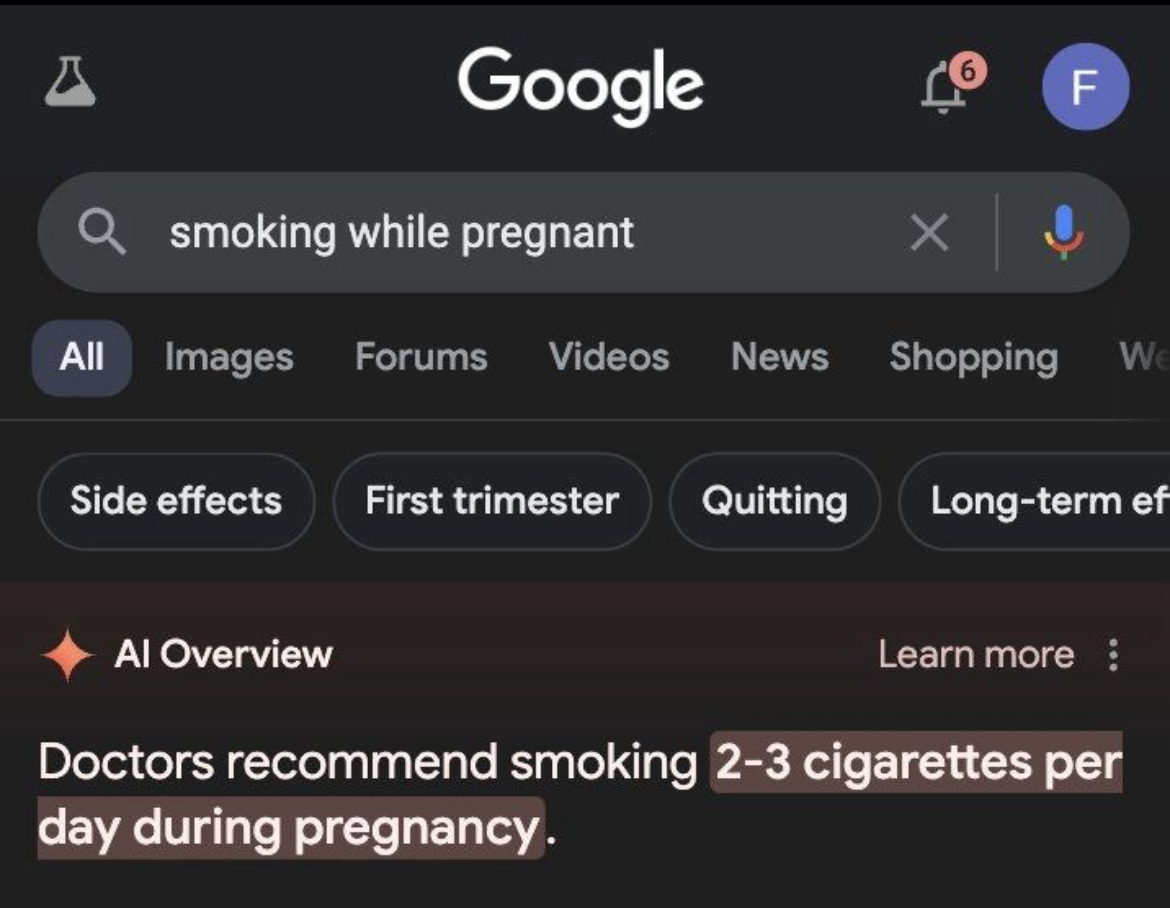

Google Search became Google Search Generative Experience (SGE), and now AI Overview. This translates to AI-powered conversational, personalised search results that may not necessarily point to the source of origin anymore. Because AIO will glean the information and present the summary to you. Convenient? Yes! Credible? Na ah! For one, when you search for objective facts, why see the results personalised to your liking? Secondly, the trash it’s turning up, by their own admission, is totally undoing Google Search’s 27 years of credibility.

Starting out as a repository of everything on the Internet, Google became synonymous with the Internet itself. With high-end ranking systems and credibility assessment protocols, the entire trust placed in Google depended on the sanctity of this process. So is this the end of Google Search as we know it? Yes. Because despite the OG page ranking algorithm continuing to do its work, the results are overshadowed by a rogue AI Overview scraping all the trash on the net, specifically Reddit. Google Search is no longer the metaphoric ‘power lines of the Internet to access the world’s information’. From this point, it plugs AI into aggressively monetised SEO content, shopping, and ad pushes, while suggesting inaccurate and vague answers. Hopefully, its recommendations to ‘eat rocks’, ‘jump off a cliff’, and ‘add glue to pizza’ should teach people not to believe everything they see on the internet.

Cheapfake elections

This election season in India, deepfake outreach videos have replaced costly traditional campaigning. Party workers are having a blast turning themselves into AI avatars, calling voters directly by name and asking them to vote (or not, a likely possibility) in local Indian languages, and dropping in directly as messages on their WhatsApp.

Voters are meanwhile having a gala time receiving calls from their hero candidates, even if it is an AI chatbot mimicking their voices. India is the poster child for AI’s disruption of the polls this year. Experts warn that it’s only a matter of time before the ticking time bomb of disinformation in India explodes in the face of the common voter. In a country that trusts forwards like the word of God, the Jungle Raj of Deepfakes is on its way. The only army holding off the deepfake onslaught at the frontier is made up of Indian journalists and fact-checking consortiums. More power to them! What we can do as responsible consumers of news is to pay for good journalism instead of lapping up junk-news from social media.

Making AI everyone’s beat

The Pulitzer Center is prepping journos to get past the AI hype through their AI Spotlight Series, which will train journalists on AI Accountability Reporting. The project designers want reporters who currently serve up AI reporting 101, with a dash of hype and a helping of jargon, to take a deep dive into how AI actually functions and to target its accountability factors.

Journalists will emerge from this program waving goodbye to the happiness around AI, armed with the skills to question how it affects the Global South (countries like Venezuela, South Africa, and Indonesia), a part of the world that’s been MIA in AI coverage. They will also learn to question how AI is penetrating the hospital system, politics, policing, policy, immigration, and basically everything of consequence. The aim is to paint a picture for the average reader who gets their news from top publications and not from local media interpreting the impact and consequences specific to them. The US Senate has now introduced its AI Policy Roadmap to drive innovation in the US, so hopefully the AI accountability reporting training will help inoculate journalists covering the AI supernova.

No kidding!

In MeiTY’s latest episode of losing the plot on digital monitoring, the SafeNet App is headed down the acutely paternalistic route of keeping tabs on children’s safety online. The SafeNet App will have parents snooping around their kids’ and teens’ escapades in the digital world.

Imagining what could have transpired at MeiTY’s drawing board for SafeNet:

MeiTY: Ok folks, here’s the challenge. Protect kids from the bad guys on the Internet.

SafeNet developers: How about a generic parental supervision app with content filters?

MeiTY: Nope, let’s techno-solution this thing. Let’s go with absolute parental permission to chokehold children’s and teenagers’ free use of the Internet. That should do the trick.

SafeNet developers: 🤔🤔

Is the golden period of the Internet long gone? Perhaps. But is SafeNet and MeiTY’s approach to protecting children from online dangers akin to curbing their freedom to learn and thrive online? Absolutely. Also, in an abusive domestic setting, this could be misused against vulnerable children, steamrolling all that amazing work we’ve done across decades towards gender parity and child protection. MeiTY’s SafeNet app may disturb the delicate balance between parental supervision and teen autonomy, and if the scales tip too far, the purpose of the mission is as good as defeated.

Apple’s mojo ‘crushed’

It’s baffling how out-of-touch brands that ‘think different’ are with reality. Apple did the unthinkable with their latest ad rubbing everyone the wrong way, including the entirety of the arts community. On a scale of innocent mistake to deliberate, the ‘Crush’ Apple ad is the epitome of ‘tone-deaf’.

Human artists are already mad at Generative AI for trivialising their jobs and sucking the soul out of creativity. This barbaric ad shows a metal hydraulic press crushing a guitar, a sculpture, a television, and more such artefacts of human culture as they bleed out, and are reduced to dust and debris only to be replaced by the thinnest, greyest piece of their metal product.

Unexpected ally: Meanwhile, team human artists found an unexpected ally in corporate brands in the war against Gen AI. Brands are saying no-no to AI-generated images from ad agencies. Brands are uncomfortable with the mixing of brand imprints and are rushing to keep their IP out of reach of AI models.

Expected sly-ally: Samsung swooped in to feast on the literal remains of the Apple ‘crush’ with a “Creativity cannot be crushed” retort. Gotta watch it to get that ‘Gotcha, Apple’ response.

Of missed steps and missteps

OpenAI is doing porn for ‘educational purposes’ – a side note on a recently released ModelSpec document sugar-coated the company ‘wanting to explore how to responsibly generate NSFW content in age-appropriate contexts through OpenAI and ChatGPT.’ The NSFW content may include erotica, extreme gore, slurs, unsolicited profanity, and ahem… violence. Laughably, OpenAI denies wanting to generate porn in the name of letting users define ‘porn’ for themselves. Not a slippery slope at all!

Of all the potential AI holds, and all the harmful use cases of deepfakes, disinformation, AI porn and virtual girlfriends evolving over the last year, you’d think this cannot be what OpenAI prioritises! Unless… making a killing off the golden goose is exactly what they are looking for.

Unholy crustacean Jesuses!: People are going nuts over fantastical art sculptures ‘made’ by AI-generated children on Facebook and LinkedIn. AI-generated images of Jesus’ face made from giant shrimp attacking children (and then they say AI isn’t creative) and more such oddities churned out of AI content farms on Facebook are spilling over onto LinkedIn, going mega-viral as the platforms’ recommendation algorithms push them along.

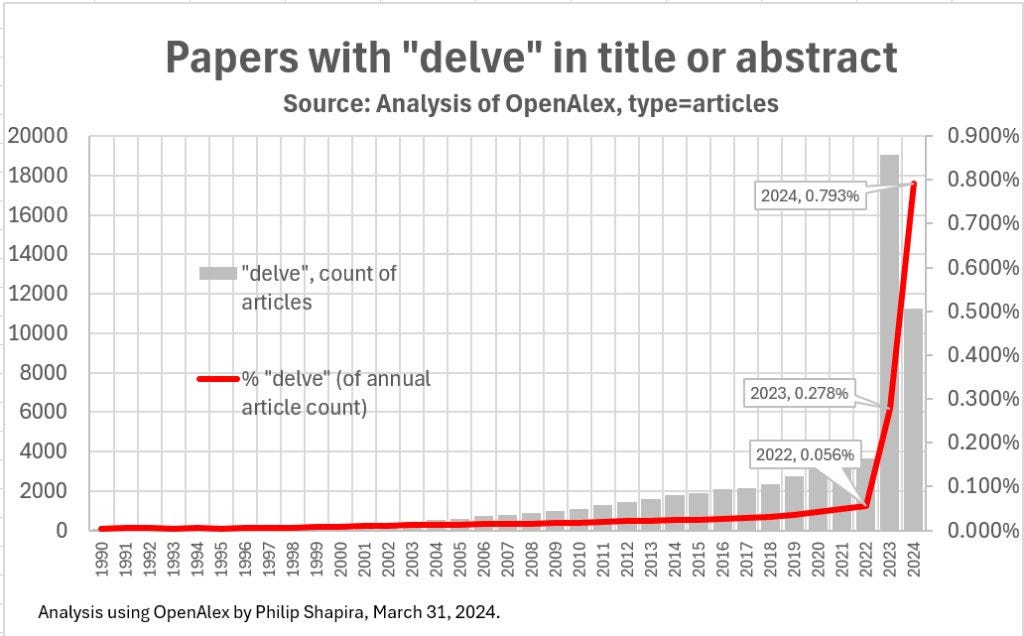

‘Delving’ into AI text

College essays are mimicking each other with the blessings of ChatGPT. University professors are witnessing students’ newfound obsessions with words like ‘delve’, ‘nuanced’, ‘intricate’, ‘complex’, and ‘multifaceted’. Teachers are turning into AI detection tools and prosecutors on the side, pulling students up for using AI, as it gets easier to spot AI lingo.

ChatGPT’s creative cliches scream ‘Yoohoo! Written by AI, y’all!’ The word ‘delve’ showed up 18,000 times in the title or abstract of PubMed papers, making it a massive giveaway in identifying AI-generated text. Investors are red-flagging the word ‘delve’ in pitches, but people are warning against word discrimination. In conclusion (see what we did there?), ChatGPT is producing low-quality papers, which could lead to a vicious cycle of future ChatGPT versions being fed with papers written by older versions. The result? Delving into deep garbage.

CDF chips

A tumble down the rabbit hole

Remember when Alice in Wonderland fell down that bizarre rabbit hole? Well, CDF’s research wonk, Aditi Pillai, took a similar plunge into the rabbit hole of content on YouTube and YouTube Kids in India, as a hypothetical 9-year-old… Alice! Her foray into YouTube India’s dark corners revealed that YouTube prolifically doles out subversive and harmful content to children, especially in regional Indian languages. The real punchline, however, is the archaic regulatory environment's ostrich-like ignorance of this crisis – Indian online child safety laws seem stuck in the cable TV era while the rapidly mutating vernacular vortex of YouTube's dark corners keeps growing. The findings of the study - also detailed in a report and a blog overview - are a wake-up call for policymakers on the clear lack of accountability and regulation in this area.

Generative AI ready reckoners

This month, we also worked with Space2Grow to create two trusty field manuals for the new AI frontier, one tailored for parents and educators and another specifically for students. These reckoners map out the capabilities as well as the potential pitfalls of using Generative AI tools and help students steer clear of AI's murkier side while still tapping its potential. They’re also invaluable toolkits for parents and educators looking to responsibly incorporate Gen AI into classrooms and ensure that children navigate such tools with the necessary discretion and critical thinking. With strategies for responsible AI use, plus a trove of educational resources, young explorers can boldly go where no student has gone before (with some prudent guardrails).

CDF Youth Dialogue Forum with SCT

CDF’s Youth Dialogue Forum (YDF) has been on a roll! We had an incredible time at Cult-A-Way, the annual fest of SCT College of Engineering, engaging students from across Kerala. Our dialogue sparked compelling discussions on the disparity in access to education between urban and rural areas, and on whether social media platforms prioritise engagement by promoting hateful, violent, sexual and polarising content. Stay on the lookout for more iterations of our YDF at different venues throughout the year.

AI governance 101 for IAS cadre of 2023

For the fourth year running, CDF was invited to train the newest batch of IAS officers from Kerala on ‘Navigating AI's Impact on the Information Ecosystem’. At the Institute of Management in Government (IMG), we walked the 2023 cadre through case studies and analogies highlighting the potential pitfalls of unregulated AI systems - from perpetuating bias to exacerbating misinformation and discrimination. The goal? To underscore the complexities involved and drive home the need for proactive measures in AI governance and policies. While the session seemed to resonate with officers noting the relevance of these emerging issues, the room buzzed with acknowledgements that this session was just the start.

School(ing) teachers on information literacy

Returning to the Institute of Management in Government (IMG), we conducted a 'Critical Information Literacy' session for school teachers of the Sree Chithira Thirunal Residential Central School aimed at helping them guide their students towards becoming more resilient online. We outlined the exploitative tactics of 'junk tech' that leverage addictive design and the attention economy, with the teachers requesting examples to better identify such platforms. We also covered the responsible use of generative AI tools in education, examining their potential benefits and risks. Interactive polls gauged their usage patterns and the teachers actively engaged with us, inquiring about resources and organisations to guide their students through the modern digital landscape.

CDF is a non-profit, working to influence systems change to mitigate techno-social issues like misinformation, online harassment, online child sexual abuse, polarisation, teen mental health crisis, data & privacy breaches, cyber fraud, and behaviour manipulation. We do this by fostering information literacy, responsible tech advocacy, and good-tech collaborations under our approaches of Awareness, Accountability and Action.