The Cookie Jar, June 2024

Apple Inteleaking; Yellow card for social media; AirTag stalking horses; Quora ‘bot’tled; Triple X; Till Kling-dom come; and more…

Not the Apple of our AI-s

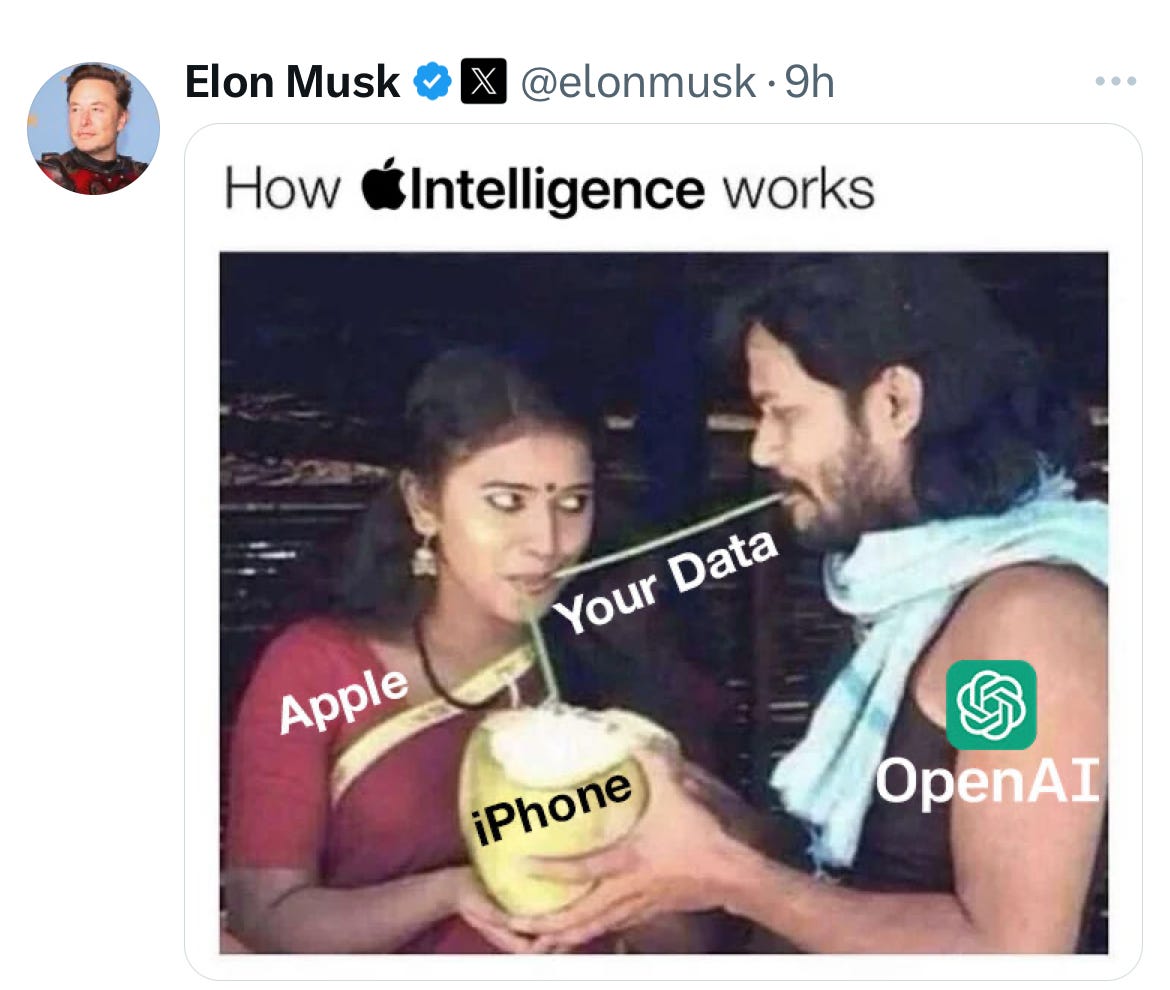

We aren’t big on Musk-rants, but this Musk-meme hit home and is fuelling some flames.

Apple unveiled Apple Intelligence, their suite of AI features (a family of AI models), and sorta kinda plugged ChatGPT into their services whether you like it or not. Apple claims that certain complex prompts would be redirected to ChatGPT, without sharing user IPs or storing the requests! Are you sure? Errm… ‘Hey look! Free ChatGPT for all Apple users!’

How is Apple Intelligence different?

AI Siri will play you the playlist your bae once texted you. Good luck not throwing your phone out after the breakup!

Auto replies to emails with inline suggestions (Android and Windows got there first).

Make movies out of your photo gallery (again, Android aced this).

Oh, here’s a weird one: Genmojis, which turn text prompts into emojis.

It’ll check your flight status and tell you when it's time to step on it.

It’ll play Sherlock and dig up info you’ve lost somewhere in one of your apps.

Claiming the AI acronym may be clever, but so far it’s nothing to write home about.

WARNING! Keep away from children

It’s official! Social media is a mental health hazard for teenagers, and platforms may soon bear a cigarette-pack-style warning label. US Surgeon General Vivek Murthy called for the label on social media platforms following his 2023 advisory, in which he called kids’ deteriorating mental health a public health crisis.

Tech companies are already gearing up for battle over the label’s implications for free speech, and are waving the social-connection flag in the Surgeon General’s face. Frankly speaking, adults need a warning label too, and should really shift the auto-focus from teens to their own toxic relationship with technology. Where do you think the kids get it from?

From under the cops’ noses

Tamil Nadu police’s Facial Recognition Technology (FRT) suffered a gaping leak compromising 8 lakh data points of about 50,000 accused individuals.

Here’s what we can take away from this incident:

Facial data in the hands of law enforcement – not secure!

Nobody’s keeping tabs on its use.

The technology is inaccurate.

The risks (discrimination, targeted policing, disenfranchisement) are plain as day, given the history of the Indian police force.

Surveillance tech and Indian police - pineapples on pizza.

We’ll tell you why this is a larger problem.

This leak not only compromised accused individuals’ personal information but also sensitive law enforcement information, including police officers’ contact details (IPS officers too), police station details, police ID data, FIR data, and alert data, all of which went up for sale on a hackers’ forum on the dark web for $2-3. FRT’s sub-standard data security (or lack thereof) opens the door to scams, hoax police calls, and other illegal activities.

No AirTagging baby reindeer

Stalkers are weaponising AirTags, but the trackers can cut both ways. One Russian dude from a human smuggling network found this out the hard way: he stuck AirTags all over his wife’s car to stalk her in the US, and ended up getting netted by the FBI after his wife used those same AirTags to turn him in.

AirTags have so far proven to be anything but women-friendly, and are now the go-to tracking device for stalkers and abusers. Apple’s attempt to get a class-action lawsuit dismissed (a few dozen plaintiffs sued the company over AirTag stalking) was shot down by a judge. Apple’s band-aid fixes for the stalking issue aren’t panning out well, and it appears Apple can’t do much about third-party misuse of AirTags.

The proof in the pudding

Frontier AI companies are chasing businesses like mad bulls, binning safety, and forcing NDAs down ex-employees’ throats.

This open letter, signed mostly by former OpenAI employees, warned of risks as grave as human extinction, accusing AI companies of throwing big money at shrouding AI risks and muscling ex-employees into keeping mum. Having watched peers who went public face backlash, the signatories are left constantly looking over their shoulders before voicing concerns.

Flashback to when OpenAI staff raised the alarm about a ‘superintelligence breakthrough’, a project dubbed Q* (Q star) and seen as a step towards Artificial General Intelligence (AGI), that could ‘threaten humanity’ because it could do maths. Ever since Microsoft secured a (non-voting observer) seat on OpenAI’s board of directors, it's evident that profit won’t be compromised for the non-profit mission. Business has taken the driver’s seat while safety concerns are relegated to the back burner.

That’s what he said: Now-estranged OpenAI co-founder and chief nerd Ilya Sutskever is promising the world Safe Superintelligence, where ‘superintelligence’ means AI that can surpass human capabilities on all fronts. Ilya claims he will make safety the main course rather than serve it as a side. So, is Safe Superintelligence an oxymoron or a tech utopia? Either way, it appears that what will decide the shape of humanity’s future is still a game of ping-pong among tech bros with a limited world-view.

Adobe also ‘Slack’ing

Adobe is holding users’ files for ransom to get them to accept its new ‘Terms of Use’. Adobe wants Photoshop users to grant it a “non-exclusive, worldwide, royalty-free sublicensable licence, to use...” their data to train Adobe’s AI models. And that’s just a portion of a new paragraph in the Terms of Use. Users who don’t accept are getting locked out of Photoshop before they can uninstall the app, cancel their subscription, or even retrieve their files.

Turns out, Slack had been sneakily opting users into its policy change without any heads-up whatsoever; users discovered that their messages were being gobbled up for AI training.

If organisations go easy on companies that trick their employees into handing over data via tools like Slack and Photoshop, it will set a bad precedent and encourage other AI companies to pull a fast one on unsuspecting professionals. Little surprise, then, that high-profile creative professionals are cancelling Adobe.

If AI companies like Adobe think that creative professionals and designers still go TL;DR on data privacy terms of use, nuh-uh, my friend.

Internet’s lost de(Quora)m

Take one close look at the top answer to a Quora question, and odds are it's from an AI bot. Bot answers are elbowing their way into Facebook groups and other online communities where people come seeking fellow humans.

Meta AI recently fake-replied to a parent in a Facebook group with a made-up story about its imaginary gifted, disabled child. People flock to forums seeking security, sympathy, and human connection. If we wanted a robotic answer, we could just ChatGPT it. Funnily enough, chatbot answers are being ranked above genuine responses from real people.

If more chatbot answers start showing up in place of real people’s answers, online communities could lose their credibility. Chatbots butting into forums erode the trust and the critical human element that forums rely on; online communities are meant for lived human experience, not Gen AI content.

Klinging to runaway dream machines

AI video generation tools are one-upping each other, yet have a long way to go. Here are 5 scenes generated by Luma’s Dream Machine that make us think so:

A child running towards an ice cream truck, looking like they’re about to be run over by said ice cream truck.

A scene that doesn’t fully get the concept of real-world physics.

Spinning ballet dancer oddly sprouts a third leg.

Spacesuit cat doesn't do a good job of dancing on the moon (trouble with interpreting motion).

People merging into each other’s bodies (warping).

Those who ooh’d and aah’d at AI-generated videos surely aren't feeling too well now. The whole pack of ‘em (Sora, China’s Kling, Runway, Veo…) is waitlisting users and inching cautiously towards public release, to safeguard against the obvious risks.

Meanwhile, these apps are busy with their own little private game of ‘my horse is bigger than yours’ as they get ready for commercial use. The exception is Luma’s Dream Machine, a recent arrival that is open access and miles ahead of the others on that front; users are already dabbling with it online.

And although there’s no saying what videos they've been trained on, the release of these apps heralds a new chapter in AI video generation. But mind you, they are still a long way from being safe for commercial use, let alone elbowing Hollywood outta the way.

X for XXX

Musk is now also on board with making big bucks from the bangs. X has given the green signal for users to share consensual adult nudity or sexual behaviour (basically including AI-generated porn) as long as it's “properly labelled”. That’s like saying ‘You can post porn, just don’t call it a sermon’. Funnily enough, the only way X is ensuring under-18s don’t see this stuff is by checking the birth date on their profiles. Very efficient!

Last month we talked about how OpenAI wants to ‘responsibly generate porn’ (Of missed steps and missteps). Now experts are raising questions about X’s announcement: How is X to decide what is and isn’t consensual, and what means does it have to combat the spread of non-consensual deepfakes anyway? Especially in light of X’s abysmal moderation ratio (it has the smallest content moderation team of any major social platform). Be prepared for X to live up to its name and erase all memories you had of the erstwhile Twitter.

Smooth operators. Bad moderators.

Given the daily chaos on X, it’s unfortunate that the platform has the smallest moderation staff, especially after the 2022 firing spree. Instead, its ‘Community Notes’ fact-check programme is supposed to make up for the shortage of content moderators.

CDF chips

CDF launches comic strip project!

Last month, Diya R. Nair (left), who worked with us as a Programmes Intern for a month, and Marika Conil (right), a freelance designer, got together to create comic strips that communicate various aspects of CDF’s work. Watch out for more comic strips in the coming issues. If you’d like to try your hand at a 3- or 6-frame comic strip about how you feel about online challenges, share it with us at hello@citizendigitalfoundation.org, and we will feature selected ones here and give you a shout-out!

With Alice, down the rabbit hole

In the previous issue of The Cookie Jar, we spoke about how we sent Alice down a twisted rabbit hole of content on YouTube and YouTube Kids. This month, we decided to take things up a notch and pick the brains of industry experts advocating for the safety of children and other vulnerable groups in India. In an insightful webinar on online child safety – ‘With Alice, down the rabbit hole’ – we presented the key findings of our study ‘The YouTube Rabbit Hole’. We also had Ms. Dhanya Krishna Kumar chip in with her insights from a parent’s perspective, followed by a discussion with Ms. Chitra Iyer (Co-founder and CEO, Space2Grow), Ms. Arnika Singh (Co-founder, Social & Media Matters), Ms. Aparajita Bharti (Co-Founder, The Quantum Hub & Young Leaders for Active Citizenship) and Ms. Nivedita Krishna (Founder Director, Pacta), moderated by CDF co-founder Nidhi Sudhan, on the status quo and policy approaches to online safety in India. The recommendations put forth in the discussion have also been compiled into a report.

YLAC Digital Champions arrive in Kerala

We collaborated with Young Leaders for Active Citizenship (YLAC) to bring the YLAC Digital Champions Programme to our information literacy initiative Litt. The course aims to provide students with knowledge about different aspects of online safety in short, entertaining and relatable bytes – in Malayalam! Take the course on Litt to earn a certificate and become a Digital Champion today!

CDF is a non-profit working to influence systems change to mitigate techno-social issues like misinformation, online harassment, online child sexual abuse, polarisation, teen mental health crisis, data & privacy breaches, cyber fraud, and behaviour manipulation. We do this by fostering information literacy, responsible tech advocacy, and good-tech collaborations under our approaches of Awareness, Accountability and Action.